Matching thousands of Latin-based names to their Japanese equivalent is a very specific and troublesome problem. It is one of many “edge cases” (i.e., a specific name matching problem) our customers are trying to overcome.

Traditionally, customers would add special rules, overrides, and other manual configurations to Rosette to solve problem cases as they appeared. However, deep learning now solves whole categories of match issues.

A recently released deep learning model addresses the specific case of matching Latin-based names to their Japanese equivalent. Japanese writes foreign borrowed words in Katakana (a syllabic alphabet), such as “ルーシー・ポートマン” for “Lucy Portman.”

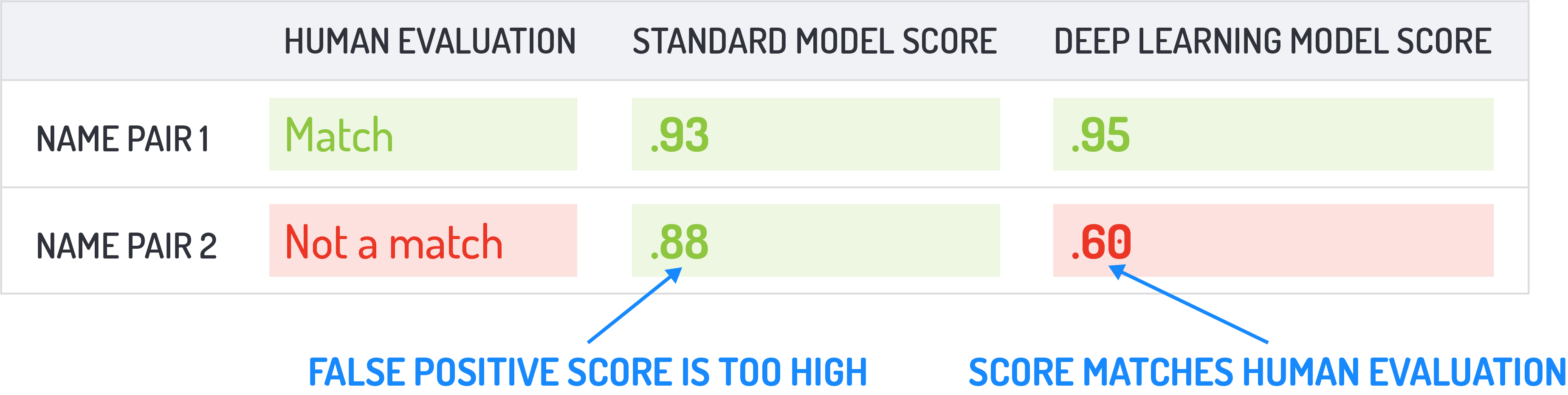

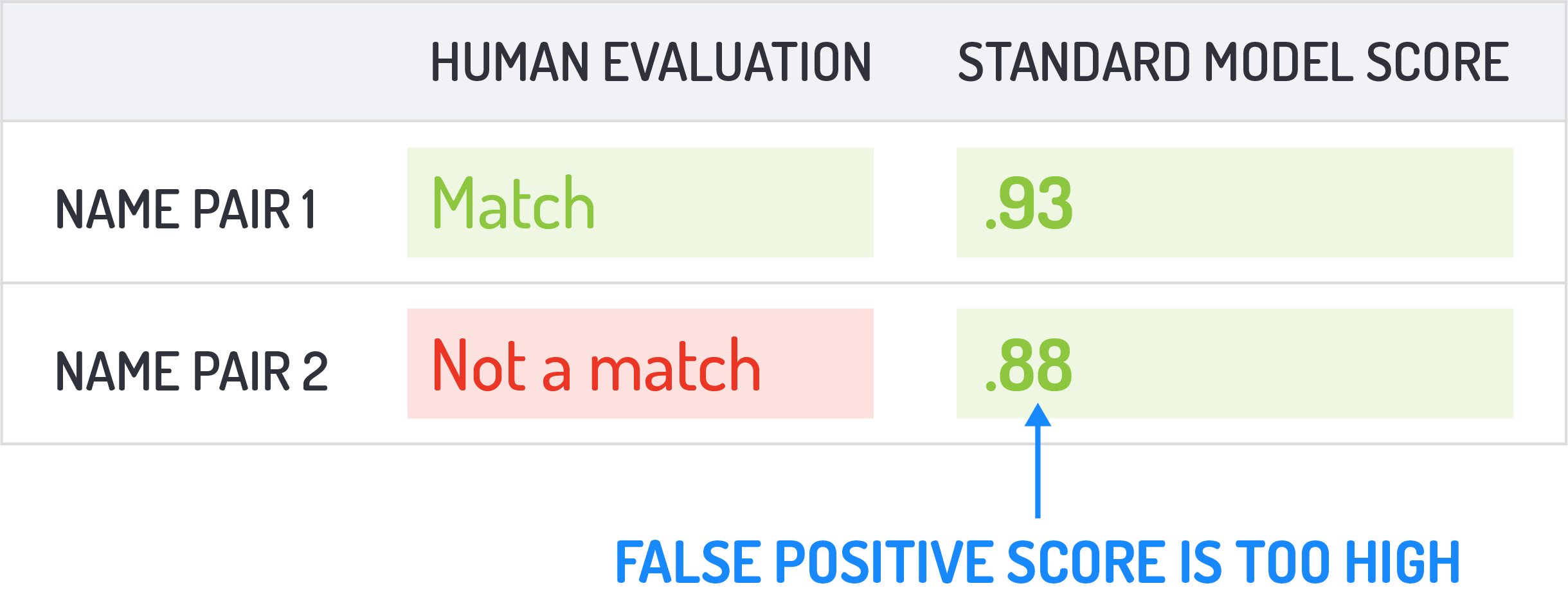

While Rosette had an algorithm to match these types of name pairs, similar names got very high similarity scores, but given a name pair that was less similar, the score didn’t go down as much as expected, thus generating false positives.

Led by Babel Street’s Chief Scientist Kfir Bar, the R&D team looked for a better solution. The team turned to deep learning, using a neural architecture that is similar to what is used for machine translation, and broke the text of each language into characters. Then using a collection of name pairs — the same data used to train the traditional machine learning model (Hidden Markov Model or HMM) — the team trained up a deep learning neural network.

“HMM is very local. When you want to predict the next character, you only consider information from the previous character and maybe the previous prediction, but just one step back,” Bar said. “But with deep learning, you can capture any context you want and the network will decide automatically what information to capture in order to succeed in this task.

“In deep learning the input is a pattern, but instead of the human engineer deciding what features should be encoded, the neural network decides on its own the important information to encode, based on what it learned in training.”

Now given a Katakana name, Rosette’s new deep learning model (a sequence-to-sequence model) generates a lattice of name options (name variations) with weights. A decoder scores each name option to indicate how similar they are. The neural network learns using a recurrent neural network architecture called long short-term memory (LSTM). For learning, Rosette uses bidirectional LSTM, in which the information from each previous character is brought forward to the next one. It also looks at each name from left to right and right to left.

Bar pointed out that with traditional machine learning, the human engineer is choosing what features the model should learn and how to weigh the various features, whether it is “parts of speech” or “all the words in the training corpus.”

“But with deep learning, it will figure out the features automatically. That is the black box part of deep learning,” Bar said. “Deep learning learns from raw data, while a traditional machine learning algorithm learns from ‘shaped data’ that a human has formatted in an understandable way.”

Although the deep learning algorithm is slower than the former HMM model, the extra processing time is more than justified because it significantly increases accuracy in matching Katakana names. In processing such data, one customer saw a 75% accuracy improvement in reduced false positives due to more accurate match scores.